WHAT is principled-design?

Principled-design is a disciplined approach aimed at designing assessment systems while keeping in mind the inferences end users wish to make based on test scores. If assessment information (i.e., subscores, total scores, etc.) is expected to have value and usefulness for educators, then early in the design phase assessment developers must have a clear sense of how the test scores will be used to support inferences about teaching and learning. Principled-design is an approach to constructing assessments that ensures the evidence, and interpretations of evidence from the assessment, align with and support the intended claims, purposes, and uses of the assessment.

There are several aspects of a principled-design approach. The foundation of an effective principled-design approach is making the assessment argument clear. An assessment argument grounds the development process by outlining the logical steps taken to get from data (i.e., students’ assessment scores) to claims (i.e., interpretations of what those scores mean). To get from data to claims, assessment designers need to articulate the warrants or rationales that link students’ scores to the claims. The assessment argument articulates the logic—based on the domain of interest, the nature and types of tasks, and the ways in which the tests are scored—that informs the assessment development process, and ensures that each design step is carried out with the overall assessment purpose and intended use in mind.
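One way to picture the data, warrant, and claim structure described above is as a small record that is only considered supported when both evidence and a rationale are present. The sketch below is illustrative only: the class name, field names, and the fraction example are assumptions made for this illustration, not part of any published principled-design or ECD specification.

```python
from dataclasses import dataclass, field


@dataclass
class AssessmentArgument:
    """Hypothetical record linking data to a claim through warrants."""

    claim: str                      # interpretation of what the scores mean
    data: list[str]                 # evidence, e.g., students' assessment scores
    warrants: list[str] = field(default_factory=list)  # rationales linking data to claim

    def is_supported(self) -> bool:
        # A claim needs both evidence and at least one stated rationale.
        return bool(self.data) and bool(self.warrants)


# Illustrative (made-up) example: a claim with data but no warrant yet.
argument = AssessmentArgument(
    claim="The student can compare fractions with unlike denominators.",
    data=["Scores on five fraction-comparison items"],
)
supported_before = argument.is_supported()

# Articulating the warrant completes the chain from data to claim.
argument.warrants.append(
    "The items require comparing fractions and are scored on the correctness of the comparison."
)
supported_after = argument.is_supported()
```

The check is deliberately simple; it records only whether a warrant has been stated, not whether the warrant is sound, which remains a matter of professional judgment.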

A popular type of principled-design is referred to as Evidence-Centered Design (ECD). ECD is a framework that prompts developers to think about not just the assessment’s claims and their warrants, but also the entire assessment system design (Mislevy & Haertel, 2006). ECD is based on Messick’s (1994) questions:

- What constructs of knowledge, skills, or other attributes should be assessed?

- What behaviors or performances should reveal those constructs?

- What tasks or situations should elicit those behaviors?

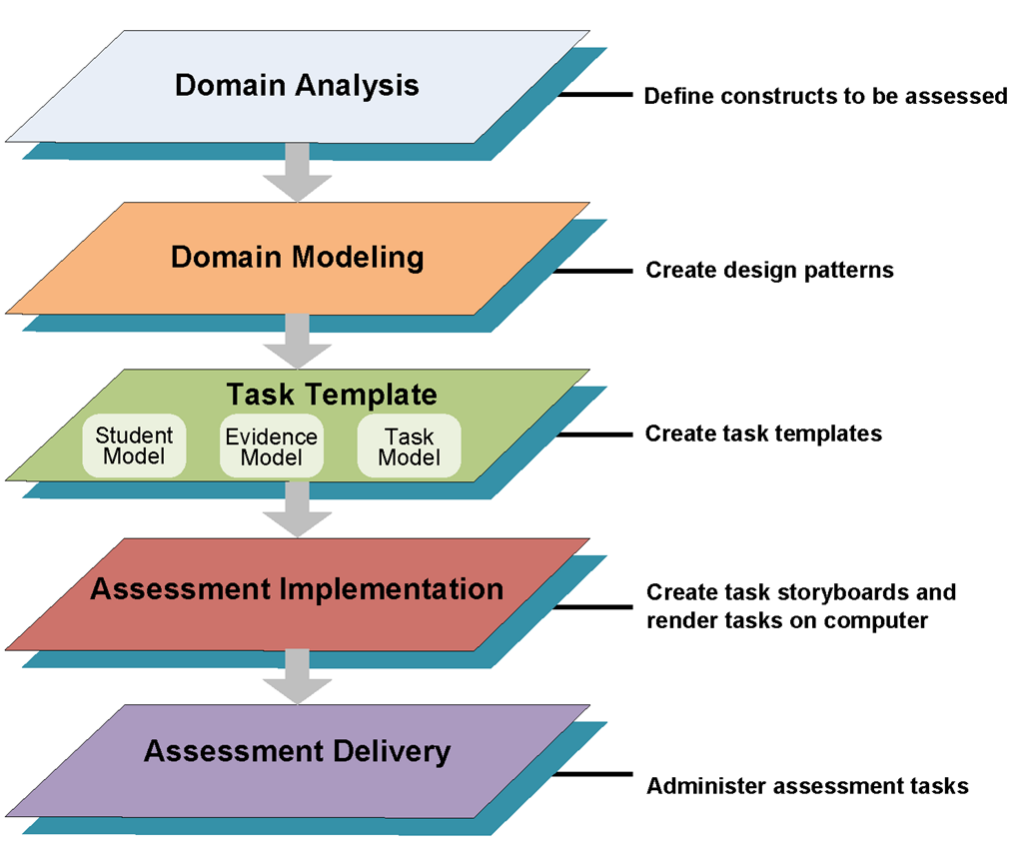

To implement ECD as part of a principled-design approach, these questions should be considered in relation to one another. Considering how the questions relate to each other supports both the validity of the assessment (the degree to which it accurately measures what it is intended to measure) and its reliability (the degree to which it yields consistent results). ECD considers all of an assessment system’s layers, from domain analysis and modeling (i.e., the content knowledge and skills being assessed), to the conceptual assessment framework development (i.e., the assessment design structure), to assessment implementation and delivery (see Exhibit 1).

As developers move from conceptualization to development, they can use two main tools: design patterns, which provide a narrative overview for the assessment, and task templates, which provide specific information for task development. Used together in the context of the assessment argument and the ECD framework elements, these tools help developers clearly articulate each design component, align the components with each other, and document the logic behind each development decision for future use.

The literature and best practices of Assessment Engineering (AE) provide further support and guidance for this type of approach. Applying AE entails the use of construct maps, evidence models and cognitive task models, task templates, and statistical assurance mechanisms, all with the intention of developing assessments that adhere to the intended assessment design and provide ample, reliable, and usable data for assessment stakeholders. Both AE and ECD are frameworks resulting from data-driven progress and innovations in the fields of assessment design, development, implementation, and scoring (Luecht, 2008). A key strength of ECD and AE is that these approaches can be applied to multiple stages, or “layers,” of an assessment enterprise to ensure coherence and quality throughout the entire system.

WHY use a principled-design approach to develop assessment programs?

All assessments are designed with a purpose in mind, and only by identifying and clarifying this purpose, or set of purposes, can one begin to determine how to evaluate the validity of the interpretations of the scores an assessment yields. Reliability is a characteristic of scores, scorers, and decisions, while validity relates to how assessment scores are interpreted and used. Validity and reliability depend entirely on how the assessments were designed, built, administered, scored, and reported. Protecting the validity and reliability of assessment scores requires great care across the entire set of decisions, from establishing a clear purpose, through assessment design and development, to all aspects of administration, scoring, and reporting.

Using a principled-design approach for the assessment development process helps ensure that the assessment yields valid and reliable results, and that the assessment is capable of meeting and surpassing federal accountability standards. Because this approach to development centers on the assessment purpose and intended use of scores, alignment and accessibility are built into the assessment from the beginning. Rather than focusing on a single theory or set of standards, principled-design focuses on the end goals and uses of the assessment, leaving space for the integration of multiple frameworks and perspectives (e.g., Universal Design for Learning (UDL), Evidence-Centered Design (ECD), and Social and Emotional Learning (SEL)). The principled-design approach also provides stakeholders with ample documentation of design and development logic and decisions, which can be used for future learning, evaluations, and development projects.

HOW does one use a principled-design approach to develop and evaluate assessment systems?

Just as teachers must engage sophisticated critical thinking and clarity of focus and purpose as they design curriculum, lessons, activities, and classroom assessments, so must assessment developers as they design both assessments and each individual item that makes up those assessments. Good assessment design and good instructional design are not separate concepts, or even associated concepts connected via a thin tether of standards. They draw from the same core and should operate with similar grain sizes.

Exhibit 1. Application of SRI’s Evidence-Centered Design Approach

As outlined in Exhibit 1, the ECD approach addresses every layer of an assessment system, providing opportunities for assessment developers and other stakeholders to ask important questions about quality, alignment, validity, and usefulness at every stage of assessment development, implementation, and evaluation. Applying the ECD approach to every layer, from Domain Analysis to Assessment Delivery, ensures that an assessment system is cohesive and aligned with the assessment’s main purpose and intended use.

Applying ECD to the Domain Analysis layer entails articulating what information is important in that domain and how that information is learned, all within the context of its direct implications for the assessment. The key aspects of the domain, once defined in the Domain Analysis layer, are then organized and structured in the Domain Modeling layer. This can be accomplished through the use of design patterns, which help developers represent the domain content in terms of the overall assessment argument. The Conceptual Assessment Framework layer builds on the Domain Modeling layer by continuing to organize and structure domain content in terms of the assessment argument, but it moves toward the mechanical details required to develop and implement an operational assessment. In this layer, developers use task templates and other development resources (e.g., psychometric models, simulation environments) (Mislevy & Riconscente, 2006).

Once developers have defined the domain content and organized it in terms of both the overall assessment argument and the assessment design and structure, the information can then be used to generate tasks and items in the Assessment Implementation layer. In this layer, the assessment pieces are manufactured using the information and resources developed in the previous layers. This involves activities such as authoring items and tasks and using simulation environments. Finally, students interact with the assessment in the Assessment Delivery layer. This student interaction yields valuable data and feedback that can be used to evaluate the assessment system's performance in terms of the assessment argument (Mislevy & Riconscente, 2006).
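The progression through the five layers can be sketched as an ordered structure in which each layer builds on the work products of the layers before it. This is a minimal illustration, not an official ECD artifact: the enum, the summary strings (paraphrased from the descriptions above), and the helper function are all hypothetical, and the strictly sequential ordering is a simplifying assumption, since in practice developers may revisit earlier layers.

```python
from enum import IntEnum


class Layer(IntEnum):
    """The ECD layers in the order described above (names are illustrative)."""

    DOMAIN_ANALYSIS = 1
    DOMAIN_MODELING = 2
    CONCEPTUAL_ASSESSMENT_FRAMEWORK = 3
    ASSESSMENT_IMPLEMENTATION = 4
    ASSESSMENT_DELIVERY = 5


# Summary of what each layer contributes, paraphrased from the text.
LAYER_OUTPUTS = {
    Layer.DOMAIN_ANALYSIS: "what is important in the domain and how it is learned",
    Layer.DOMAIN_MODELING: "design patterns framing content as an assessment argument",
    Layer.CONCEPTUAL_ASSESSMENT_FRAMEWORK: "task templates and psychometric models",
    Layer.ASSESSMENT_IMPLEMENTATION: "authored items and tasks",
    Layer.ASSESSMENT_DELIVERY: "student data and feedback for evaluating the system",
}


def missing_prerequisites(completed: set[Layer], target: Layer) -> list[Layer]:
    """Return earlier layers not yet completed, assuming strict ordering."""
    return [layer for layer in Layer if layer < target and layer not in completed]


# Hypothetical project state: the first two layers are done.
completed = {Layer.DOMAIN_ANALYSIS, Layer.DOMAIN_MODELING}
missing = missing_prerequisites(completed, Layer.ASSESSMENT_IMPLEMENTATION)
```

In this hypothetical state, `missing` contains only the Conceptual Assessment Framework layer, reflecting the point that items and tasks should not be authored until the task templates and related resources exist.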

References

Luecht, R. M. (2008, November). Assessment engineering in test design, development, and scoring. Presentation at the East Coast Organization of Language Testers (ECOLT) Conference.

Messick, S. (1994). The interplay of evidence and consequences in the validation of performance assessments. Educational Researcher, 23(2), 13-23.

Mislevy, R. J. & Haertel, G. D. (2006). Implications of evidence-centered design for educational testing. Educational Measurement: Issues & Practice, 25(4), 6-20.

Mislevy, R. J. & Riconscente, M. M. (2006). Evidence-centered assessment design: Layers, concepts, and terminology. In S. Downing & T. Haladyna (Eds.), Handbook of Test Development (pp. 61-90). Mahwah, NJ: Erlbaum.